Beethoven’s symphony was once mixed with Einstein’s speech to show that music could be separated from voice using simple mathematical tricks. The same concept was introduced into radar as MUSIC in the 1980s. MUSIC stands for multiple signal classification. It is a signal processing algorithm routinely used in traffic radars and in air traffic control to determine the direction of arriving cars and aircraft.
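As a rough illustration of how MUSIC estimates directions of arrival in the textbook setting, here is a minimal NumPy sketch. It makes several assumptions: a uniform linear array of 8 sensors at half-wavelength spacing, two simulated narrowband sources at -20° and 35°, and additive complex Gaussian noise. All function names and numbers are illustrative and are not taken from any particular radar system. The key step is splitting the sensor covariance into signal and noise subspaces. The source directions are the angles whose steering vectors are nearly orthogonal to the noise subspace.

```python
import numpy as np


def steering_matrix(n_sensors, angles_deg, spacing=0.5):
    """Steering vectors of a uniform linear array (spacing in wavelengths)."""
    m = np.arange(n_sensors)[:, None]                    # sensor index as a column
    theta = np.deg2rad(np.asarray(angles_deg))[None, :]  # angles as a row
    return np.exp(-2j * np.pi * spacing * m * np.sin(theta))


def music_spectrum(X, n_sources, angles_deg, spacing=0.5):
    """MUSIC pseudospectrum from snapshots X of shape (n_sensors, n_snapshots)."""
    n_sensors, n_snapshots = X.shape
    R = X @ X.conj().T / n_snapshots          # sample covariance matrix
    _, eigvecs = np.linalg.eigh(R)            # eigenvalues come in ascending order
    En = eigvecs[:, : n_sensors - n_sources]  # noise subspace: smallest eigenvalues
    A = steering_matrix(n_sensors, angles_deg, spacing)
    # Peaks where a steering vector is (nearly) orthogonal to the noise subspace.
    return 1.0 / np.sum(np.abs(En.conj().T @ A) ** 2, axis=0)


def top_peaks(grid, values, k):
    """Grid positions of the k highest interior local maxima, sorted."""
    is_peak = (values[1:-1] > values[:-2]) & (values[1:-1] > values[2:])
    idx = np.nonzero(is_peak)[0] + 1
    best = idx[np.argsort(values[idx])[-k:]]
    return np.sort(grid[best])


# Simulate two uncorrelated sources arriving from -20 and 35 degrees.
rng = np.random.default_rng(0)
n_sensors, n_snapshots = 8, 200
A_true = steering_matrix(n_sensors, [-20.0, 35.0])
S = (rng.standard_normal((2, n_snapshots))
     + 1j * rng.standard_normal((2, n_snapshots))) / np.sqrt(2)
noise = 0.1 * (rng.standard_normal((n_sensors, n_snapshots))
               + 1j * rng.standard_normal((n_sensors, n_snapshots))) / np.sqrt(2)
X = A_true @ S + noise

grid = np.arange(-90.0, 90.5, 0.5)
P = music_spectrum(X, n_sources=2, angles_deg=grid)
estimates = top_peaks(grid, P, k=2)  # should lie close to -20 and 35
```

The number of sources is assumed known here. In practice it is itself estimated from the eigenvalue spectrum.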

Several decades later, MUSIC found its way into optical microscopes in the form of MUSICAL. It still does more or less the same thing: it finds the direction of photons arriving at the camera of a microscope. However, MUSICAL goes further and traces the photons back to their origin. Biological samples can therefore be imaged at nanometer-scale resolution, about three times finer than the resolution limit of a conventional optical microscope. In this way, MUSICAL turns ordinary microscopes into super-resolution microscopes.

But photons are fast and busy, and the processes associated with them, such as fluorescence emission, also happen very quickly. High-quality scientific-grade cameras can now capture them. The MUSICAL algorithm, however, needs to catch up in speed if life scientists and other microscopy users are to benefit from super-resolved images on ordinary lab microscopes. The 3D Nanoscopy group has therefore applied for a patent to facilitate this. It is commercializing the technology with the help of Norinnova AS, the technology transfer office of UiT.

Where it all started

Nature Communications 2016

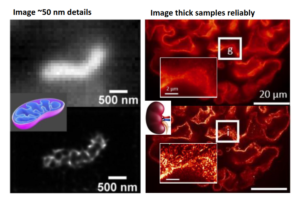

In 2016, Agarwal published the first paper on MUSICAL, a new super-resolution imaging technique that relaxed the demanding conditions usually needed for super-resolution. It achieves a resolution as fine as 50 nm and needs fewer frames and lower excitation power. It also remains effective even at high fluorophore concentrations. Moreover, it is compatible with any fluorophore that blinks during the recording period. MUSICAL performs on par with or better than single-molecule localization techniques and contemporary statistical super-resolution methods.

Refer to the link: https://www.nature.com/articles/ncomms13752

Research seed for innovation

Horizon 2020-funded MSCA-IF (2017-2019)

This project seeded the transition of MUSICAL from research to innovation. It led to the first invention disclosure on MUSICAL, which eventually resulted in a patent application (https://norinnova.no/en/musical/). It also made it possible to explore biological use cases for MUSICAL, including thin tissue sections and live-cell imaging. Finally, it led to the development of an ImageJ plugin for MUSICAL.

Publication link: https://opg.optica.org/boe/fulltext.cfm?uri=boe-11-5-2548&id=430113

ImageJ plugin link: https://github.com/sebsacuna/MusiJ

Speckle

The proposed research integrates MUSICAL with a chip-based nanoscopy system. This would remove the need for fluorescence blinking entirely and so avoid photochemical toxicity without compromising spatiotemporal resolution. It is possible because MUSICAL uses intensity fluctuations, however they are induced (by blinking or otherwise), to generate super-resolved images. Complementing this, a waveguide chip made of a high-refractive-index material (n = 2) generates dynamically varying, speckle-like illumination patterns. These patterns have spatial frequencies higher than those achievable with far-field, diffraction-limited optics. The illumination induces the fluorescence intensity fluctuations that MUSICAL needs. The result will be controlled, system-based imaging instead of less controllable blinking-based imaging. It will also allow a very large field of view (~mm²) with a resolution of 150–200 nm using a low-NA (0.2) collection objective lens. This works because the spatial frequencies of the chip-based illumination remain the same regardless of the collection optics.
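To put the low-NA figure in context, here is a worked example of the Abbe diffraction limit for the 0.2 NA objective alone. It assumes an emission wavelength of about 520 nm, which the text does not state:

```latex
d_{\text{Abbe}} = \frac{\lambda}{2\,\mathrm{NA}}
               = \frac{520\ \text{nm}}{2 \times 0.2}
               = 1300\ \text{nm},
\qquad
\frac{1300\ \text{nm}}{200\ \text{nm}} = 6.5,
\qquad
\frac{1300\ \text{nm}}{150\ \text{nm}} \approx 8.7
```

Under that assumption, the stated 150–200 nm would be roughly 6.5 to 8.7 times finer than what the collection objective resolves on its own.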

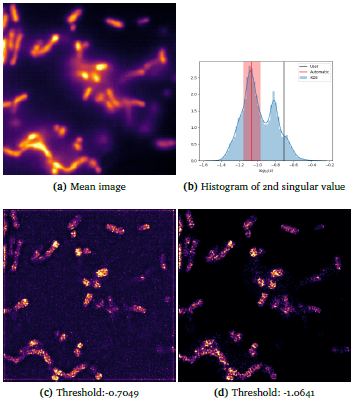

Auto Threshold

MUSICAL requires a threshold parameter that users have so far chosen heuristically. Using a pattern recognition approach, the Auto Threshold MUSICAL algorithm can now determine this threshold automatically, which makes MUSICAL easier to use.
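In MUSICAL, the threshold splits the singular values of the image stack within a small window into a signal subspace and a noise subspace. Candidate emitter positions are then scored by how strongly the point spread function (PSF) for that position projects onto each subspace. The sketch below illustrates this idea in 1D with a single window. It assumes a Gaussian PSF, two synthetic emitters with independent random brightness fluctuations, a hand-picked threshold of 1.0 and an exponent alpha = 4. The function names are hypothetical. This is not the MUSICAL or MusiJ implementation, and it does not reproduce the Auto Threshold method.

```python
import numpy as np


def gaussian_psf(pixels, center, sigma):
    """Unit-norm sampled Gaussian PSF for an emitter at `center` (assumed PSF model)."""
    g = np.exp(-((pixels - center) ** 2) / (2.0 * sigma**2))
    return g / np.linalg.norm(g)


def subspace_indicator(stack, pixels, test_points, sigma, threshold, alpha=4.0):
    """MUSIC-style indicator for one window of pixels (1D simplification).

    stack: (n_pixels, n_frames) intensities, with n_pixels <= n_frames so that
    the left singular vectors span the whole pixel space.
    threshold: singular values above it are treated as signal, the rest as noise.
    """
    U, s, _ = np.linalg.svd(stack, full_matrices=False)  # s is sorted descending
    n_signal = int(np.sum(s > threshold))
    U_signal, U_noise = U[:, :n_signal], U[:, n_signal:]
    f = np.empty(len(test_points))
    for i, t in enumerate(test_points):
        g = gaussian_psf(pixels, t, sigma)
        d_signal = np.linalg.norm(U_signal.T @ g)  # projection onto signal subspace
        d_noise = np.linalg.norm(U_noise.T @ g)    # projection onto noise subspace
        f[i] = (d_signal / d_noise) ** alpha
    return f, n_signal


# Two emitters 3 px apart, closer than the ~3.5 px FWHM of the assumed PSF.
rng = np.random.default_rng(1)
pixels = np.arange(15, dtype=float)
sigma = 1.5
G = np.stack([gaussian_psf(pixels, e, sigma) for e in (6.0, 9.0)], axis=1)
brightness = rng.uniform(0.0, 1.0, size=(2, 100))  # independent fluctuations, 100 frames
stack = G @ brightness + 0.01 * rng.standard_normal((15, 100))

# Score the two emitter positions and the midpoint between them.
f, n_signal = subspace_indicator(stack, pixels, np.array([6.0, 7.5, 9.0]),
                                 sigma, threshold=1.0)
```

The indicator is high at the emitter positions and lower at the midpoint, although the two PSFs overlap strongly. Moving the threshold changes how many singular values count as signal, and therefore every indicator value. That sensitivity is why choosing it automatically is attractive.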

A link to Auto Threshold MUSICAL will be made available here once the results are published. It will be accompanied by a new nanoscopy dataset with Auto Threshold MUSICAL results. This work is an outcome of Sebastian Acuna's master's thesis project.

Computational scalability established

Research Council of Norway’s Biotek project nano-Path (2019-2022)

In this project, Agarwal participated as a co-PI and established the computational scalability of MUSICAL. The project also showed that MUSICAL enables super-resolution in large-scale, clinically relevant tissue nanoscopy. In effect, it established a proof of concept for both the technology and its application.

Refer to link: https://prosjektbanken.forskningsradet.no/en/project/FORISS/285571

Refer to link: https://ieeexplore.ieee.org/document/9475068

Refer to link: https://www.nature.com/articles/s41377-022-00731-w

Refer to link: https://opg.optica.org/oe/fulltext.cfm?uri=oe-29-15-23368&id=453169

Towards minimum viable product

DLN Innovation Pilot Project (2022-2023)

The Centre for Digital Life Norway (https://www.digitallifenorway.org/) has been working to increase innovation in Norway (see the link below). In 2022, it launched its first DLN Innovation Pilot, selecting four projects from across Norway to foster innovation. MUSICAL was proudly among them. DLN provided ~1 MNOK to develop a minimum viable product of MUSICAL, an important step towards increasing its commercialization potential.

Refer to: https://www.digitallifenorway.org/competence-areas/innovation/documents/2021.12.05—3290-to-be-report—final.pdf

Prospecting commercialization partners and market potential

Research Council of Norway’s Qualification fund (2023)

The Research Council of Norway funded a qualification project for MUSICAL to assess its market potential and identify commercialization partners. Three potential commercialization partners have been identified, and non-disclosure agreements have been signed. Relevant market segments have also been identified. This paves the way for the next round of funding.

Refer to link.

Research Council of Norway makes super-resolution microscopy MUSICAL

Research Council of Norway’s Verification fund (2024)

The Research Council of Norway has decided to provide a further NOK 5 million (~EUR 0.45 million) for a verification project for MUSICAL. In this project, the 3D Nanoscopy team at UiT The Arctic University of Norway will develop dedicated high-speed hardware for MUSICAL. Norinnova, the technology transfer office, will identify ways of bringing the invention to society. MUSICAL will soon make a difference to how much our life scientists know about health and disease.

Live cell

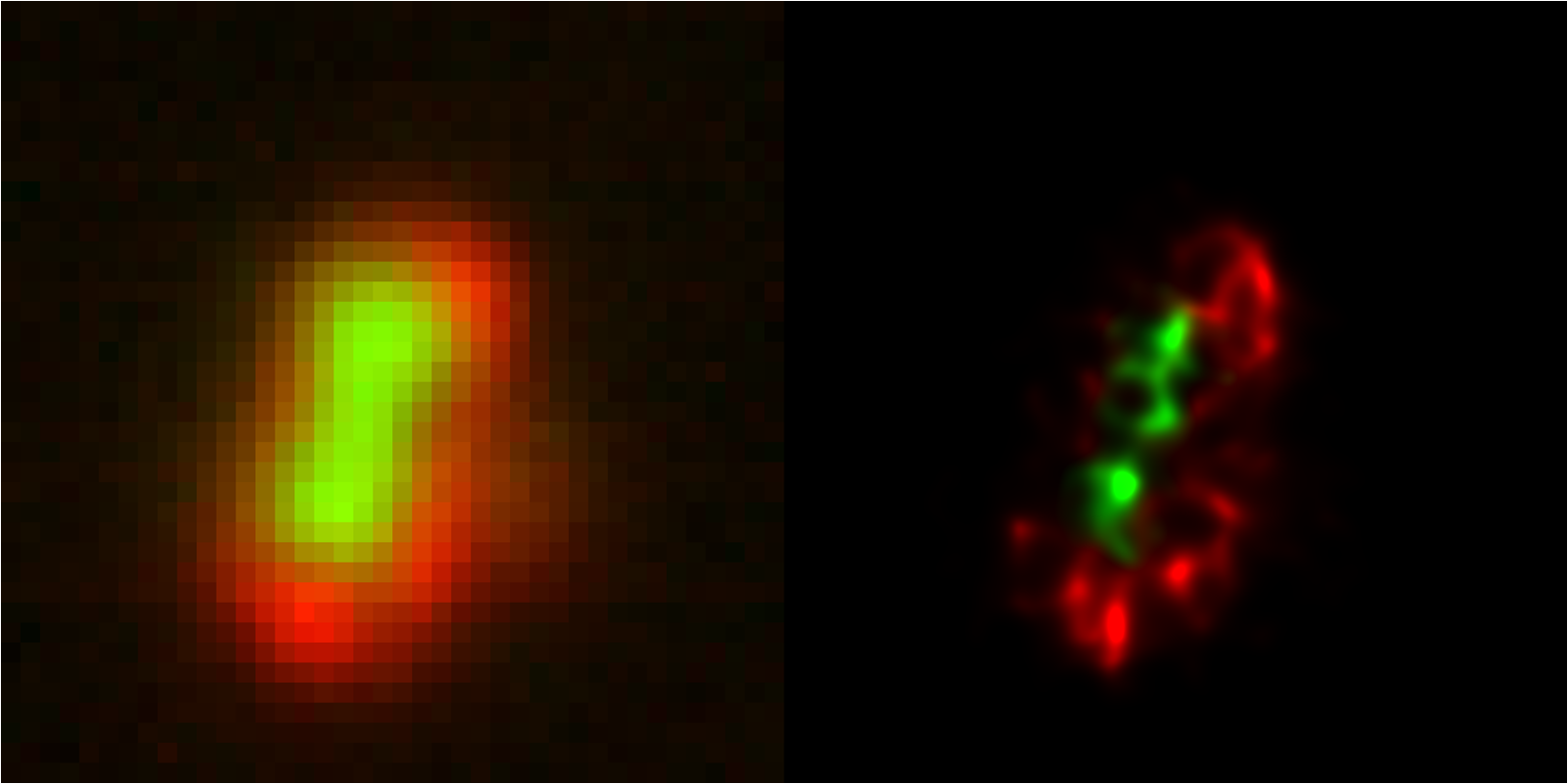

MUSICAL is a live-cell-friendly fluorescence nanoscopy technique supporting resolution of up to 35 nm. Use your widefield microscope to obtain super-resolved image streams of live cells.

The salient properties of MUSICAL are:

- Requires lower excitation power than most super-resolution techniques, so it is less phototoxic and especially well suited for live cells.

- Requires very few image frames (50–200 are sufficient in most cases), so it is suitable for dynamic systems such as live cells.

- Compatible with any dye or fluorescent protein in theory. Tested on Alexa dyes, GFP, RFP, YFP, CMP, SirTubulin, SirActin, MitoTracker dyes, etc.

- Compatible with dense or sparse samples and uses natural fluctuations in fluorescence intensity. Tested on cells and tissues without any special imaging buffer (i.e. redox solutions).

- Tested on a variety of cameras, objective lenses (0.4 NA 20X to 1.49 NA 100X oil immersion), and multi-channel acquisitions (4 channels so far).

- Works with TIRF and epifluorescence x-y-t image stacks.

It’s available in an ImageJ repository as an easy-to-install and easy-to-use plugin.